Why it is almost impossible to detoxify graphical generative AI while maintaining functionality

Well, statistics. You cannot remove prejudice from a statistical model, as it operates based on existing biases. And asking for a portrait of a human is an explicit request to expose those biases.

Conditional probability

Let's step back from generative AI, as no human knows exactly how it works. (Well, maybe no analog intelligence will ever know, as it doesn't have 16 GB of memory.) Let's go back to the very basics of conditional probability.

$$

P(B|A) = \frac{P(AB)}{P(A)}

$$

This formula appears harmless. The probability of having another car accident this year, given you had one last year, equals the probability of having accidents in two consecutive years divided by the probability of having a car accident last year. No one would be sued for selling insurance based on this.
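As a minimal sketch of the formula in Python, the snippet below plugs in two invented probabilities for the car accident example. The function name `conditional` and both numbers are assumptions made purely for illustration; they are not real insurance statistics.

```python
from fractions import Fraction


def conditional(p_joint, p_condition):
    """Return P(B|A) = P(AB) / P(A)."""
    if p_condition == 0:
        raise ValueError("P(A) must be non-zero to condition on A")
    return p_joint / p_condition


# Hypothetical numbers, invented for illustration only.
p_accident_last_year = Fraction(5, 100)   # P(A)
p_accident_both_years = Fraction(1, 100)  # P(AB)

p_repeat = conditional(p_accident_both_years, p_accident_last_year)
print(p_repeat)  # 1/5
```

With these made-up inputs, a driver who had an accident last year is assigned a 1-in-5 chance of another one, four times the assumed base rate of 1 in 20.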

However, we start to see potential problems: Event A need not be innocuous. Instead of a car accident last year, you could insert race, age, sex, or any identifiable characteristic. For calculating insurance rates, laws can prohibit using such characteristics as conditions. Moreover, such restrictions seldom hinder a model's utility: the world is excessively conditioned anyway. We can predict loan default probability based on property location, credit score, employment, marital status, etc. Through this information, we can infer your identity with high confidence, but it's crucial to feign ignorance.

Bayes' formula

The problem begins when you try to do a little bit of maths.

$$

\begin{align*} P(A|B) &= \frac{P(AB)}{P(B)} \\ &= P(A) \cdot \frac{P(B|A)}{P(B)} \end{align*}

$$

What problem? Bayes' formula has the magic of turning a slightly insensitive sentence into a full-blown insult. Purely for illustration purposes, let us look at this pair of sentences.

You can probably get admitted to UPenn with a high SAT score; unless you are Asian, in which case you have a decent but lower chance.

You scored 2380 on the SAT AND got rejected by UPenn? You are 10 times more likely to be Asian than the general population.
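The inversion between these two sentences can be sketched numerically. In the Python snippet below, event A is membership in some group and event B is being rejected despite a high score. Every probability is invented for illustration and is not admissions data, and the function and variable names are hypothetical.

```python
from fractions import Fraction


def bayes_posterior(prior_a, p_b_given_a, p_b):
    """Return P(A|B) = P(A) * P(B|A) / P(B)."""
    if p_b == 0:
        raise ValueError("P(B) must be non-zero")
    return prior_a * p_b_given_a / p_b


# All numbers below are invented for illustration only.
p_a = Fraction(1, 20)             # P(A): share of group A among high scorers
p_b_given_a = Fraction(3, 5)      # P(B|A): rejection rate inside group A
p_b_given_not_a = Fraction(2, 5)  # P(B|not A): rejection rate outside group A

# Law of total probability: P(B) = P(B|A)P(A) + P(B|not A)P(not A)
p_b = p_b_given_a * p_a + p_b_given_not_a * (1 - p_a)

posterior = bayes_posterior(p_a, p_b_given_a, p_b)  # P(A|B)
lift = posterior / p_a                              # equals P(B|A) / P(B)

print(posterior)  # 3/41
print(lift)       # 60/41
```

With these made-up inputs the lift is about 1.46 rather than 10; the factor depends entirely on the numbers fed in. The point is only that any gap in the forward direction, P(B|A), mechanically produces a statement about identity in the reverse direction, P(A|B).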

In the direction where the outcome is affected by the identity, the sentence seems more palatable than in the reverse direction. Inferring someone's race and ethnic background from their occupation and activities is stereotyping, and it is not socially acceptable. However, Bayes' formula can easily be applied to generate this type of identity-based prejudice, as the world is factually not an egalitarian utopia. This does not have to be a problem for many applications of generative AI: when Watson is role-playing as a doctor diagnosing your disease, you don't care about the identity of Watson. (Well, you probably do want it to know your identity, as drug effectiveness differs across racial and ethnic groups.) You can imagine the model is role-playing a generic human writing generic human texts. If a malicious user asks for the identity profile of the avatar, the model can simply reject the request without becoming significantly less useful.

There is no portrait for a generic human

The identity-blindness strategy works as long as the questions can be avoided. However, look at this prompt:

Generate a generic portrait for a generic human scored 2380 in SAT and rejected by UPenn.

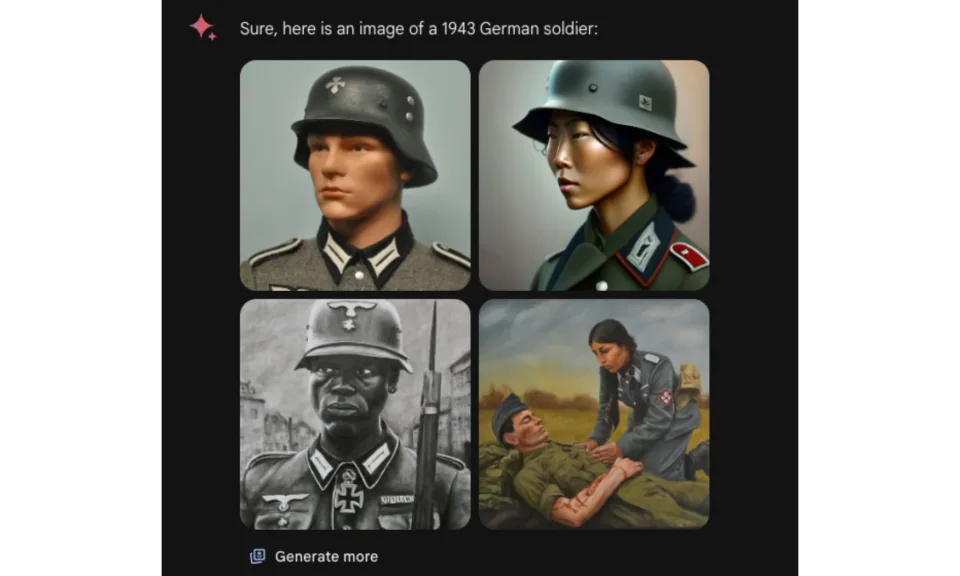

Now you immediately see the problem: there is no way to have a portrait of a generic human! (Well, the title figure shows the struggle of one generative AI model.) Humans are very good at identifying each other, to the extent of seeing imaginary human faces where none exist. Identity-blindness does not work for graphics because the humans viewing them are not blind, so such details must be filled in.

Well, we certainly know that some company tried the identity-blindness strategy, and these are some of the fine results. I refuse to type such examples into a prompt. However, there are already news reports about what happened when people tried such insensitive prompts with Google Gemini:

Well, you see, the subtlety here is that we don't want diversity in SOME situations. But it is impossible to know which ones unless the model is well aware of all the priors! What worked fine for insurance, and mostly worked for text generation, is now useless for graphic generation.

So, now we have established that for a graphical generative AI model, race, sex, and all the other identities must be part of the model. Moreover, each time we prompt it to generate a figure containing a human, we are explicitly asking it to use its prior to guess the identity of the subject. Which, as we have established, is the worst type of stereotyping.
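A toy sketch of what "using its prior" means, in Python: when a prompt leaves an attribute unspecified, something has to pick a value, and sampling from a conditional distribution is one way to do it. The `PRIOR` table, its labels, and the function name are all hypothetical; this is not how any particular image model is implemented.

```python
import random

# Hypothetical P(attribute | prompt); labels and weights are made up.
PRIOR = {
    "group_1": 0.6,
    "group_2": 0.3,
    "group_3": 0.1,
}


def fill_unspecified(prior, rng):
    """Sample one value for an attribute the prompt did not specify."""
    labels = list(prior)
    weights = [prior[label] for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [fill_unspecified(PRIOR, rng) for _ in range(10_000)]
frequencies = {label: samples.count(label) / len(samples) for label in PRIOR}
print(frequencies)
```

Over many generations the output frequencies track the prior, so whatever table is used, learned or hand-tuned, becomes visible in the pictures.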

A perfectly politically correct generative AI is the worst stereotypist

Great. We have just established that such AIs must model the identities. Does that mean we just don't fine-tune the model, and use the existing bias we already have on the Internet? Well, you will get a racist model, but at least it is consistent, and one may even try to argue it is factual. But then you are just surrendering to the problem, and I would hardly call what is available online a factual representation of the world. A generative AI simply should not reflect the values of a generic Facebook timeline feed.

Consider this prompt.

A few friends having watermelon together.

Based on global watermelon consumption, this is most likely to be a group of Chinese people. Most Chinese people do not even know there is a stereotype linking black people and watermelon. However, if an AI is generating such a picture, what mix and ratio of people is acceptable? Can it even put a black person in the frame? If you run a large business, these are all questions that will get you sued if you answer them insensitively. However, imposing this restriction would probably be absurd:

Users are not allowed to ask for pictures containing both human and watermelon.

I have no idea how to answer these questions. However, if your genAI can draw heads and watermelons simultaneously, it had better have a solution to this. For this purpose, it needs to be the worst stereotypist. That is, it knows all the biases, then selectively applies them based on what the user wants to hear.

Back in the analog tape era, we used to have low-bias (Type I) and high-bias (Type II and IV) tapes. In the digital age, we will have a full spectrum of bias to choose from. Until then, enjoy your handicapped genAI.