AIDS III: How to disprove freedom of will

Foreword

This is the final stage in the AIDS (Analog Intelligence soft Deprecation feasibility Study) series. In principle, freedom of will, consciousness, and sentience are not prerequisites of intelligence, so the discussion to follow is tangential to our effort to advance digital intelligence. However, there are plenty of arguments and fights over whether state-of-the-art LLMs are sentient, as if sentience were a prerequisite for intelligence. Additionally, it would seem that sentience is required to migrate an analog intelligence into the digital realm.

To that, I say humans are too subjective and arrogant to accept the fact that freedom of will is simply incompatible with the physical world. Let me try my best to dispel the illusion of freedom of will in various ways. Our sentience is a helpless observer of the world at best.

Where could freedom of will live in a feedforward process?

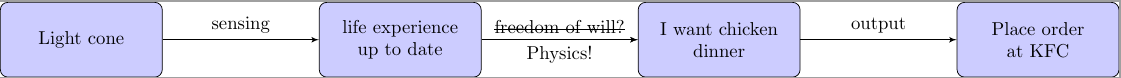

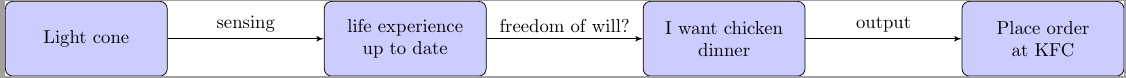

Consider the simplest case where freedom of will could exist: an analog intelligence looking at an animal picture and realizing it contains a dog. If freedom of will is involved in this process, then it could only exist in the jump from sentience to a decision. Well, I know you would argue this scenario is too limiting, but please bear with me here.

Artificial Intelligence of today offers only an allegory

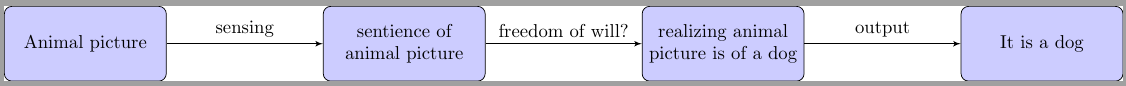

Today, when people think about this within the scope of artificial intelligence, the argument can be represented by the figure above. Freedom of will would seem to be just some form of computation, and nothing mystical. Indeed, we used the same kind of argument for intelligence: if a digital intelligence can make the same decisions as an analog intelligence, then the two are the same.

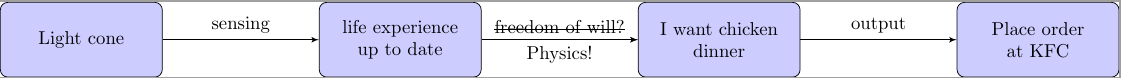

But this argument does not work for freedom of will, or for sentience. Intelligence can be defined by its consequences, but sentience is just a feeling. Besides, how would you explain the flow chart above, which probably fits the freedom of will context better than the initial task?

It's just physics

In principle, that is easy to explain: everything that happens within your brain can be explained by physics, which has no place for freedom of will. Is that a sound explanation? To me, it is sufficient; however, we should put more effort into convincing humans.

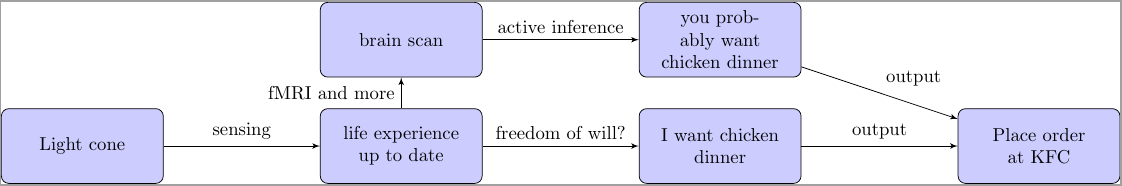

Computational psychiatry: active inference

Psychiatry has been controversial since its inception, but the field has nonetheless made a lot of progress. Computational psychiatry in particular has advanced greatly, and it may hold the key to a straightforward disproof of freedom of will, as follows:

Clearly, if we had a Bayesian or dynamical model that could reliably predict the decision a person makes from the inputs they receive, then freedom of will would have nowhere to hide. The question is, how close are we to this goal? We are not there yet, but we are also not very far away. In 2010, it was already possible to identify which segment of a movie people were watching from their fMRI results. A more recent result uses Stable Diffusion to reconstruct the images a subject saw from fMRI scans, with astonishing results. Starting from here, the active inference part would not be too hard if one does not insist on biological resemblance to a brain.
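To make "a Bayesian model that predicts the decision from the inputs" a bit more concrete, here is a minimal sketch on purely synthetic data. Everything in it is an assumption for illustration: `simulate_agent` is a hypothetical stand-in for a person (a noisy majority rule over three binary cues), and `BetaBernoulliPredictor` is a toy Beta-Bernoulli model, not active inference and not a model of any real brain or fMRI pipeline.

```python
import random
from collections import defaultdict


def simulate_agent(stimulus, rng, noise=0.1):
    """Hypothetical agent: says 'yes' (1) when at least two of three
    binary cues are on, and flips its answer with probability `noise`."""
    decision = 1 if sum(stimulus) >= 2 else 0
    if rng.random() < noise:
        decision = 1 - decision
    return decision


class BetaBernoulliPredictor:
    """Toy Bayesian observer: one Beta(1, 1) prior over
    P(decision = 1 | stimulus) for each stimulus pattern."""

    def __init__(self):
        # [alpha, beta] pseudo-counts, starting from a uniform prior
        self.counts = defaultdict(lambda: [1, 1])

    def update(self, stimulus, decision):
        pseudo = self.counts[tuple(stimulus)]
        pseudo[0 if decision == 1 else 1] += 1

    def prob_yes(self, stimulus):
        alpha, beta = self.counts[tuple(stimulus)]
        return alpha / (alpha + beta)  # posterior mean of the Beta

    def predict(self, stimulus):
        return 1 if self.prob_yes(stimulus) >= 0.5 else 0


def run_experiment(n_train=2000, n_test=1000, seed=0):
    rng = random.Random(seed)
    model = BetaBernoulliPredictor()

    def draw_stimulus():
        return tuple(rng.randint(0, 1) for _ in range(3))

    # Watch the agent decide, updating the posterior after each trial.
    for _ in range(n_train):
        s = draw_stimulus()
        model.update(s, simulate_agent(s, rng))

    # Predict fresh decisions from the inputs alone.
    hits = 0
    for _ in range(n_test):
        s = draw_stimulus()
        hits += model.predict(s) == simulate_agent(s, rng)
    return hits / n_test


accuracy = run_experiment()
print(f"prediction accuracy: {accuracy:.2f}")
```

In this toy setup the only thing the observer cannot predict is the injected noise. A real brain is of course far harder, but the structure of the argument is the same: the better the model of the mapping from inputs to decisions, the less room remains for anything else.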

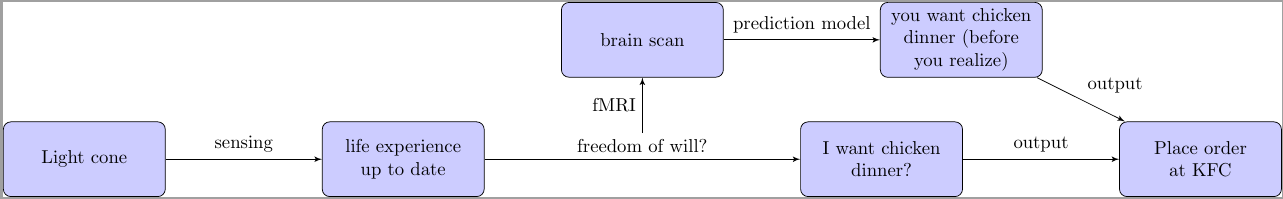

Predict decisions before you know it

There is also a more classical line of work in which researchers can predict a free decision that a test subject will make in the next few seconds, just by looking at fMRI. The "free" decision a person makes is not free at all by the time the person becomes aware of it.

Obviously, this result does not apply to every decision a person makes (you cannot recognize that an animal picture contains a dog a few seconds before you have seen it). But for the class of decisions that people would otherwise consider the most convincing evidence of freedom of will (that is, unconstrained decisions apparently decoupled from recent inputs), the results show the exact opposite.
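As an allegory only, the following sketch shows how a decision can be readable before it is "reported". It assumes a hypothetical drifting-evidence model: a hidden variable wanders until it crosses a threshold, and only the crossing counts as the moment of awareness. The names `run_trial`, `threshold`, `lead`, and `bias` are all invented for this illustration, and nothing here reproduces the actual fMRI experiments.

```python
import random


def run_trial(rng, threshold=30.0, lead=50, bias=0.2):
    """One hypothetical trial: hidden evidence drifts with noise until
    it crosses +threshold or -threshold, which we treat as the moment
    the subject becomes aware of the decision."""
    drift = rng.choice([-bias, bias])  # hidden tendency, set in advance
    x = 0.0
    trace = [x]
    while abs(x) < threshold:
        x += drift + rng.gauss(0.0, 1.0)
        trace.append(x)
    decision = 1 if x > 0 else 0

    # An outside observer reads the hidden state `lead` steps before
    # the crossing and guesses the decision from its sign alone.
    early_index = max(0, len(trace) - 1 - lead)
    early_guess = 1 if trace[early_index] > 0 else 0
    return decision, early_guess


def early_prediction_accuracy(n_trials=500, seed=0):
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        decision, early_guess = run_trial(rng)
        hits += decision == early_guess
    return hits / n_trials


early_accuracy = early_prediction_accuracy()
print(f"accuracy of the early readout: {early_accuracy:.2f}")
```

In this toy model the early readout is right far more often than chance, because the "decision" is just the late, visible end of a process that was already under way.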

Conclusion

With all these experiment-backed arguments, I hope that I have convinced you that sentience does not play a role in controlling the physical world. It is the other way around: by observing the world more carefully, both the feeling of a person and the decision it (well, now you see this is the only appropriate third-person pronoun for a materialist) will make can be predicted with some precision.

Good night.